Stanford CS25: V3 I How I Learned to Stop Worrying and Love the Transformer

18 Jan 2024

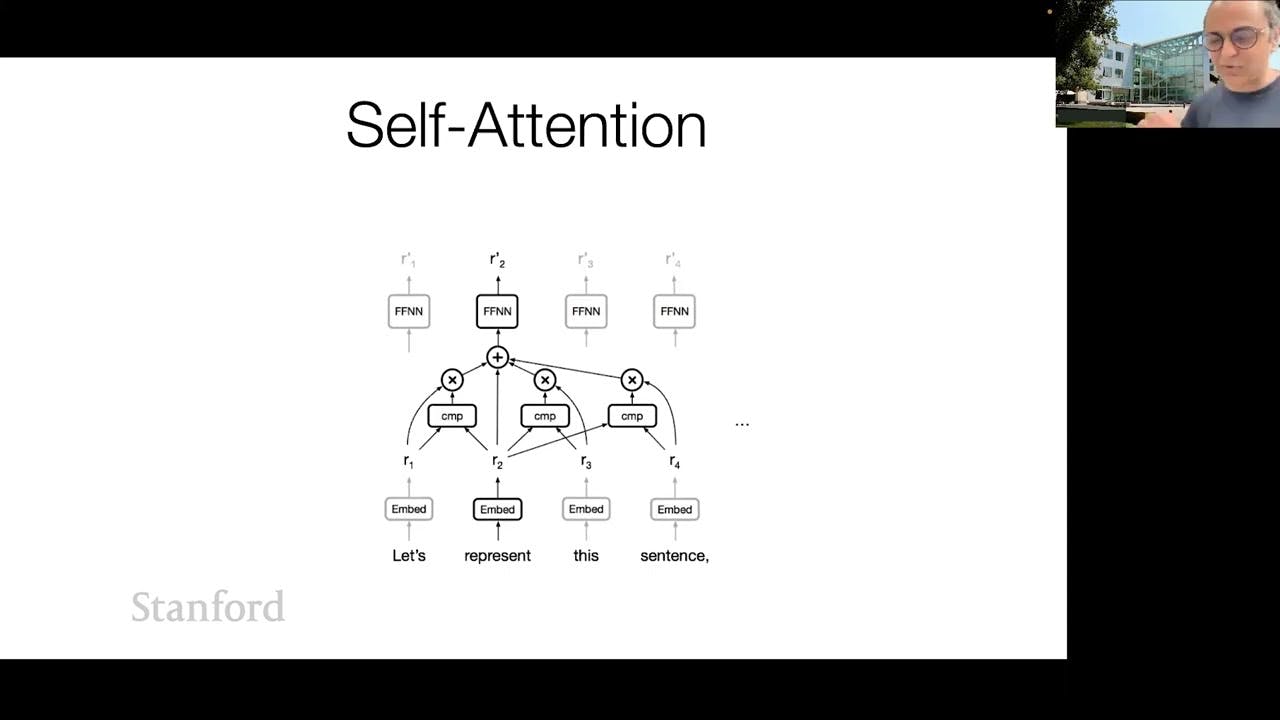

Improvements in the Transformer Model

- Self-attention has no built-in notion of order, so position encoding is needed to capture the order of elements in a sequence.

- Different approaches to position encoding, such as relative position embeddings and rotary position encodings.

- Challenges related to the computational efficiency and memory requirements of self-attention, and possible solutions such as local attention, sparse attention, and online computation of the softmax.

- The importance of architectural advancements like grouped-query attention, which reduces activation memory by sharing key and value heads across groups of query heads, and speculative decoding, where a lighter model first drafts candidates that a heavier model then scores and accepts or rejects.

- The potential for further improvements in large language models, including better scaling laws, better memory management, and optimized precision.

- The possibility of using external tools and resources to enhance the capabilities of language models and facilitate human-machine collaboration.
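The online computation of the softmax mentioned in the list above can be sketched for a single query as follows. This is a minimal illustration in pure Python, not the speaker's implementation: the function names `online_attention` and `reference_attention` are hypothetical, and the scores are assumed to be finite floats. It keeps a running maximum and a running normalizer, so the full vector of attention weights never has to be stored.

```python
import math


def online_attention(scores, values):
    """Softmax-weighted average of `values`, computed in one streaming pass.

    `scores` holds one attention logit per key; `values` holds one
    vector (list of floats) per key. Both names are illustrative.
    """
    running_max = float("-inf")  # largest score seen so far
    running_sum = 0.0            # softmax normalizer, relative to running_max
    acc = [0.0] * len(values[0])  # unnormalized weighted sum of values

    for s, v in zip(scores, values):
        new_max = max(running_max, s)
        # Rescale everything accumulated so far to the new maximum.
        scale = math.exp(running_max - new_max)
        w = math.exp(s - new_max)
        running_sum = running_sum * scale + w
        acc = [a * scale + w * x for a, x in zip(acc, v)]
        running_max = new_max

    return [a / running_sum for a in acc]


def reference_attention(scores, values):
    """Standard two-pass softmax attention, used only for comparison."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    dim = len(values[0])
    return [sum(e * v[d] for e, v in zip(exps, values)) / total for d in range(dim)]
```

Both functions should agree up to floating-point error. The streaming version only needs the running maximum, the running sum, and one accumulator vector, which is what makes block-wise attention with a small memory footprint possible.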

Understanding Non-Autoregressive Generation

- Non-autoregressive generation involves narrowing down the set of all possible paths and not relying on a fixed order to generate words.

- Learning both the ordering and the conditional independencies in non-autoregressive generation is challenging.

- If an oracle provided the correct order of sentence generation, it would significantly improve non-autoregressive generation.

Model Capabilities and Real-World Generalization

- Language models can be used as planners in robotics, leveraging their knowledge about the world.

- Current models have the potential to extract a vast amount of information from text.

- Large language models have limitations in generalizing beyond their training data, and our limited understanding of what their text representations capture constrains what we know to be possible.

Challenges in Agent Coordination

- Goal decomposition, coordination, and verification are fundamental challenges in making multiple systems work together.

- Modular systems and communication between specialized agents are promising approaches to coordination.

- Architectures trained end to end with gradient descent and modular systems both have potential, but progress has been slow.

Conditional Independence in Latent Space

- Non-autoregressive generative models assume that the outputs are conditionally independent of one another given the input.

- Latent space and vector quantization can model the conditional dependence in generation.

- Discrepancies in mode representation and practical speed render this approach less effective.
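The conditional independence assumption summarized above can be written out as a comparison of factorizations. This is a sketch under assumed notation that does not appear in the summary: $x$ is the input, $y = (y_1, \dots, y_T)$ the output sequence, and $z$ a discrete latent code such as one produced by vector quantization.

$$
\begin{aligned}
\text{autoregressive:} \quad & p(y \mid x) = \prod_{t=1}^{T} p(y_t \mid y_{<t}, x) \\
\text{fully non-autoregressive:} \quad & p(y \mid x) \approx \prod_{t=1}^{T} p(y_t \mid x) \\
\text{with a latent code:} \quad & p(y \mid x) = \sum_{z} p(z \mid x) \prod_{t=1}^{T} p(y_t \mid z, x)
\end{aligned}
$$

In the third form the outputs are independent only after conditioning on $z$, so the dependence between output positions is carried by the latent code rather than by a left-to-right order.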

Prospects in Human-Computer Interaction

- Human-computer interaction can benefit from combining advanced models with user feedback.

- Diverse ideas and people pursuing important directions can further advance deep learning and product development.

- There is ample room for innovation in building companies and products that leverage large language models.

Current Work on Building Data Workflows

- The speaker mentions their current work on building models that automate data workflows and improve analysis and decision-making processes within companies.

- They emphasize the need for a full-stack approach and believe that tools and external resources can play an important role in enhancing the capabilities of language models.